Back in September, the team at Government Executive did a fantasy football-inspired take on government leadership. Entitled the Dream Team, the short piece highlights eight bureaucrats found throughout the government (or, in my case, formerly in the government). To use the words of the GovExec authors, “here are some of the folks we’d love to see on any leadership team tackling the kinds of big problems only government can address.”

Collectively, this group has a range of skills: finance, acquisitions, program and project management, legal, IT security, HR, software platform development, disaster recovery…and, somewhat curiously, two geographers.

I’m humbled to have been included on this list, particularly given the accomplishments of the other geographer, Mike Byrne. That said, what I find more intriguing is how two geographers made this list to begin with. What is it about geography that is gaining the attention of management and leadership journalists? Why would they think these are the skills needed on government teams to solve big problems? And why now?

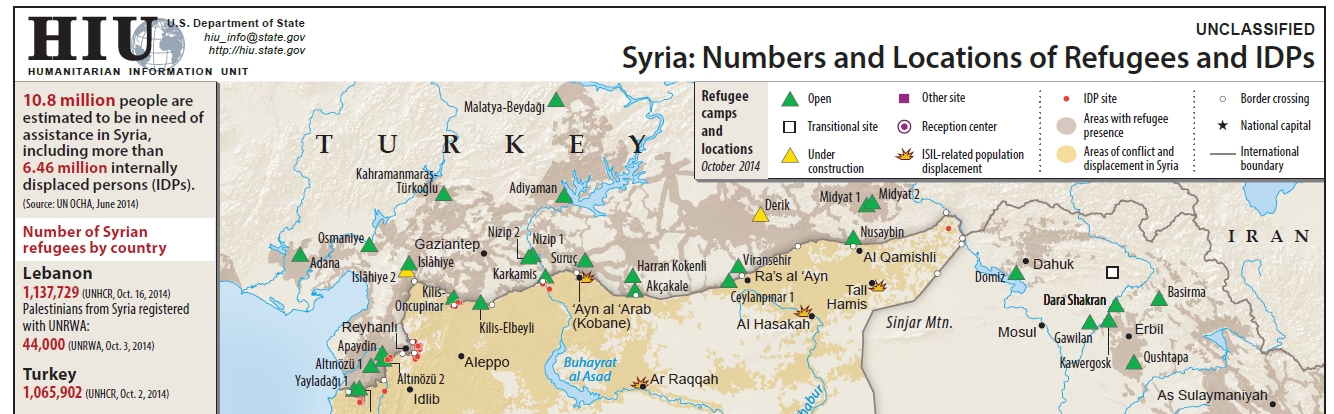

Starting with the question of awareness, it’s clear that digital geography and maps have captured the public’s attention. The combination of the freely available Global Positioning System (GPS), ubiquitous data networks, high-resolution imagery, increasingly powerful mobile devices, and interoperable web services has fundamentally changed how the average person interacts with maps and geographic data. In the ten years since the introduction of Google Earth, the average person has come to expect complex, up-to-date, descriptive, interactive maps at their disposal anytime, anywhere, and on any device. This shift is nothing short of revolutionary.

And while the glitz of slippy maps and spinning globes has brought the public back to maps, there is more to the story of why geographers are critical members of multidisciplinary leadership teams. I believe two key characteristics set geographers apart: Information Integration and Problem Solving. The first is the capacity of the spatial dimension to integrate information across disciplines; the second is the way spatial logic, combined with digital tools, can predict, analyze, and visualize the impact of a policy decision.

Begin TL;DR

Let’s begin with the idea of information integration. At its core, Geography is a spatial science that utilizes a range of qualitative, descriptive, quantitative, technical, and analytical approaches in applications that cross the physical sciences, social sciences, and humanities. It may seem trite to say, but everything happens somewhere, so anything that involves location can be studied from a geographic perspective. Where most disciplines have fairly defined domains of knowledge, Geography, and its focus on the spatial dimension, cuts laterally across these domains.

The cross-cutting nature of location is the fundamental reason Geography is in a resurgence. Geographic location provides the mechanism to integrate disparate streams of information: data from one discipline can be linked to data from another simply by where it occurs. As a conceptual framework, Geography is an integrative lens that asks what forces interact to define the characteristics of a given location. Combined with Geographic Information Systems (GIS) technology, spatial location acts as the relational key used to link tables of information together in a database. It is this union of mental framework and technology that gives Geography its unique capacity for information integration.
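To make the “relational key” idea concrete, here is a minimal Python sketch, assuming a simple latitude/longitude grid serves as the shared key. The gauge and tract records, the 0.5-degree cell size, and the 2.0 m flood threshold are all hypothetical; real GIS software would typically use true geometries and a spatial join rather than grid snapping.

```python
from collections import defaultdict
from math import floor

# Hypothetical grid resolution in decimal degrees; each grid cell is a shared location key.
CELL_SIZE_DEG = 0.5

def cell_key(lat, lon, size=CELL_SIZE_DEG):
    """Snap a latitude/longitude pair to the index of the grid cell containing it."""
    return (floor(lat / size), floor(lon / size))

# Hypothetical records from two unrelated disciplines: hydrology and demography.
river_gauges = [
    {"gauge": "G1", "lat": 38.9, "lon": -77.0, "flood_stage_m": 3.2},
    {"gauge": "G2", "lat": 39.3, "lon": -76.6, "flood_stage_m": 1.1},
]
census_tracts = [
    {"tract": "T100", "lat": 38.8, "lon": -76.9, "population": 4200},
    {"tract": "T200", "lat": 39.4, "lon": -76.7, "population": 3100},
    {"tract": "T300", "lat": 40.7, "lon": -74.0, "population": 8000},
]

def join_by_location(gauges, tracts):
    """Pair each gauge with every tract that falls in the same grid cell (an inner join)."""
    # Index the tracts by cell key, much like a database index on a join column.
    by_cell = defaultdict(list)
    for t in tracts:
        by_cell[cell_key(t["lat"], t["lon"])].append(t)
    joined = []
    for g in gauges:
        # .get() avoids inserting empty entries for cells with no tracts.
        for t in by_cell.get(cell_key(g["lat"], g["lon"]), []):
            joined.append((g["gauge"], t["tract"], g["flood_stage_m"], t["population"]))
    return joined

joined = join_by_location(river_gauges, census_tracts)
# Hypothetical question that neither dataset answers alone:
# how many people live in cells whose gauge exceeds 2.0 m?
exposed = sum(pop for _, _, stage, pop in joined if stage > 2.0)
print(joined)
print(exposed)
```

Here tract T300 has no gauge in its cell, so it drops out of the result. Snapping to a grid is the simplest possible spatial key; it can split nearby points across a cell boundary, which is one reason GIS tools rely on geometric tests such as containment or intersection instead.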

However, information is typically aggregated for a purpose: to solve a problem, find an answer, or understand a situation, and Geography offers unique tools for that as well. All problem solving involves breaking a complex problem into smaller, solvable units. For a geographer, this starts with applying spatial logic to the problem. When solving a multivariate problem, a geographer accepts the spatial distribution and spatial associations of a phenomenon as primary evidence, and then seeks to discover the processes that led to that distribution. This contrasts with most disciplines, where process-based knowledge of individual characteristics is combined first and then tested against the spatial distribution. The elevation of spatial logic over process logic is the key differentiator between a geographer and a domain-specific analyst.
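One common way to quantify spatial association is global Moran’s I, a standard spatial autocorrelation statistic: I = (N / W) · Σᵢ Σⱼ wᵢⱼ (xᵢ − x̄)(xⱼ − x̄) / Σᵢ (xᵢ − x̄)², where W is the sum of all weights. The sketch below is only an illustration, not the author’s method, and assumes a hypothetical one-dimensional transect of cells in which each cell’s neighbors are the cells on either side, with every weight equal to 1. Positive values indicate that similar values cluster together; negative values indicate that neighbors tend to differ.

```python
def morans_i(values, neighbors):
    """Global Moran's I with binary weights: w_ij = 1 if j is in neighbors[i], else 0."""
    n = len(values)
    mean = sum(values) / n
    z = [v - mean for v in values]  # deviations from the mean
    num = 0.0    # sum over neighbor pairs of z_i * z_j
    w_sum = 0    # W, the total weight (count of neighbor links)
    for i, nbrs in enumerate(neighbors):
        for j in nbrs:
            num += z[i] * z[j]
            w_sum += 1
    den = sum(d * d for d in z)
    return (n / w_sum) * (num / den)

def line_neighbors(n):
    """Adjacency along a hypothetical 1-D transect of n cells (left and right neighbors)."""
    return [[j for j in (i - 1, i + 1) if 0 <= j < n] for i in range(n)]

nb = line_neighbors(6)
print(morans_i([1, 1, 1, 5, 5, 5], nb))  # clustered pattern
print(morans_i([1, 5, 1, 5, 1, 5], nb))  # alternating pattern
```

With these inputs, the clustered pattern yields a positive I (about 0.6) and the alternating pattern a strongly negative one (about -1). In the spatial-logic approach described above, a result like this is the evidence to be explained, and the search for the underlying process comes after.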

Bringing spatial logic into the technological domain relies on Geographic Information Science (GISc) to provide the conceptual framework, algorithms, and specialized tools needed to analyze data encoded with location information. It is the analytical power of these functions, integrated with the data collection, storage, retrieval, and dissemination tools of GIS, that forms the geographer’s problem-solving toolkit. Additionally, cartographic visualization provides a mechanism to encode these data and analyses so that complex spatio-temporal relationships can be displayed and quickly understood. Whether the task is data exploration, sense-making, or communicating results, displaying geographic data in map form is a tremendous advantage over text. And now, with the web, cartography can be interactive, span multiple scales, and change dynamically through time.

So, why is now the time for geographers? The answer is threefold: first, the scale and complexity of the problems we face; second, the amount and variety of information that can be applied to those problems; and third, the maturity of digital geographic tools. Finding solutions to climate change, energy, sustainable development, disaster risk reduction, and national security will require interdisciplinary approaches that are firmly grounded in the spatial dimension. With the transition to a digital world, society (governments included) finds itself in a state of information overload. In a world where data is plentiful, value shifts from acquiring data to understanding it. Geography as a discipline, and geographers equipped with a new generation of spatial information technology, are well adapted to this new paradigm.

When trained well, the modern geographer has broad academic training across a range of disciplines, uses the spatial perspective as a means of information integration and analysis, and is facile with the digital tools needed to collect, store, analyze, visualize, and disseminate geographic information and analysis. Combine these skills with management and leadership training, and the geographer becomes a potent fusion, one that I think this article correctly identifies as critical to the leadership teams needed to solve big problems.